OpenAI announced a new flagship generative AI model on Monday that it calls GPT-4o. The "o" stands for "omni," referring to the model's ability to handle text, speech, and video. GPT-4o is set to roll out "iteratively" across the company's developer and consumer-facing products over the next few weeks.

OpenAI CTO Mira Murati said that GPT-4o provides "GPT-4-level" intelligence but improves on GPT-4's capabilities across multiple modalities and media.

"GPT-4o reasons across voice, text and vision," Murati said during a streamed presentation at OpenAI's offices in San Francisco on Monday. "And this is incredibly important, because we're looking at the future of interaction between ourselves and machines."

GPT-4 Turbo, OpenAI's previous leading, "most advanced" model, was trained on a combination of images and text and could analyze images and text to accomplish tasks like extracting text from images or even describing the content of those images. But GPT-4o adds speech.

What does this enable? A variety of things.

GPT-4o greatly improves the experience in OpenAI's AI-powered chatbot, ChatGPT. The platform has long offered a voice mode that reads the chatbot's responses aloud using a text-to-speech model, but GPT-4o supercharges this, allowing users to interact with ChatGPT more like an assistant.

For example, users can ask the GPT-4o-powered ChatGPT a question and interrupt ChatGPT while it's answering. The model delivers "real-time" responsiveness, OpenAI says, and can even pick up on nuances in a user's voice, in response generating voices in "a range of different emotive styles" (including singing).

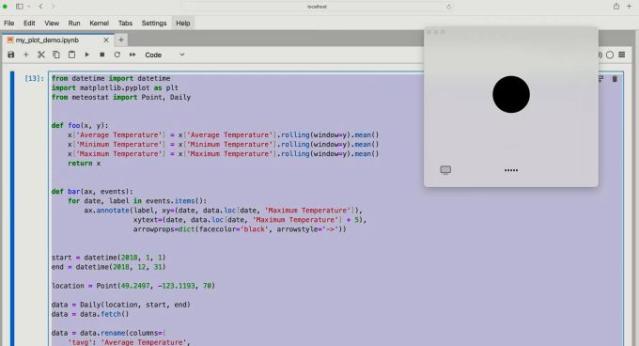

GPT-4o also upgrades ChatGPT's vision capabilities. Given a photo, or a desktop screen, ChatGPT can now quickly answer related questions, on topics ranging from "What's going on in this software code?" to "What brand of shirt is this person wearing?"

These features will evolve further in the future, Murati says. While today GPT-4o can look at a picture of a menu in a different language and translate it, in the future the model could allow ChatGPT to, for instance, "watch" a live sports game and explain the rules to you.

"We know that these models are getting more and more complex, but we want the experience of interaction to actually become more natural, easy, and for you not to focus on the UI at all, but just focus on the collaboration with ChatGPT," Murati said. "For the past couple of years, we've been very focused on improving the intelligence of these models … But this is the first time that we are really making a huge step forward when it comes to the ease of use."

GPT-4o is more multilingual as well, OpenAI claims, with enhanced performance in around 50 languages. And in OpenAI's API and Microsoft's Azure OpenAI Service, GPT-4o is twice as fast as, half the price of and has higher rate limits than GPT-4 Turbo, the company says.

At present, voice isn't a part of the GPT-4o API for all customers. OpenAI, citing the risk of misuse, says that it plans to first launch support for GPT-4o's new audio capabilities to "a small group of trusted partners" in the coming weeks.

GPT-4o is available in the free tier of ChatGPT starting today, and to subscribers of OpenAI's premium ChatGPT Plus and Team plans with "5x higher" message limits. (OpenAI notes that ChatGPT will automatically switch to GPT-3.5, an older and less capable model, when users hit the rate limit.) The improved ChatGPT voice experience underpinned by GPT-4o will arrive in alpha for Plus users in the next month or so, alongside enterprise-focused options.

In related news, OpenAI announced that it's releasing a refreshed ChatGPT UI on the web with a new, "more conversational" home screen and message layout, and a desktop version of ChatGPT for macOS that lets users ask questions via a keyboard shortcut or take and discuss screenshots. ChatGPT Plus users will get access to the app first, starting today, and a Windows version will arrive later in the year.

Elsewhere, the GPT Store, OpenAI's library of third-party chatbots built on its AI models along with the tools for creating them, is now available to users of ChatGPT's free tier. And free users can take advantage of ChatGPT features that were formerly paywalled, like a memory capability that allows ChatGPT to "remember" preferences for future interactions, uploading files and photos, and searching the web for answers to timely questions.